Artificial intelligence (AI) has developed rapidly in recent years, and significant social and economic impacts are already being felt. Major companies such as Facebook and Amazon have been implicated in data collection scandals. Machine automation no longer raises fears only of massive job losses; it also brings other risks, such as potentially biased decisions by machines. This rapid and potentially invasive growth of AI raises questions about the federal government’s current and future role in overseeing its development.

The purpose of this publication is to provide a snapshot of the current AI situation, the associated risks and the future outlook.

Initially, one of the common applications of AI was the use of expert systems programmed with specific instructions to emulate human skills in solving complex problems. These systems were popular at a time when computing power and data availability were more limited.1

For the past several years, with the increase in computing power and the availability of big data, the most common application of AI has been machine learning. Instead of following explicitly programmed logical instructions to solve a problem, machine learning algorithms classify and compile data, identify trends in them and improve their performance as a result. However, huge amounts of data are needed to improve the performance of AI platforms. For example, image recognition platforms require millions of images of the same kind of object before they can recognize it. These techniques are modelled on the human brain, where information is stored in constantly evolving neural connections.2
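The contrast between an expert system and machine learning can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy data, the spam-filter scenario and the function names (`expert_rule`, `learn_threshold`, `learned_rule`) do not come from the sources cited here. The first function applies a threshold written by a programmer; the second derives its threshold from labelled examples.

```python
# Hypothetical toy data: (number of links in a message, label). Made up for illustration.
training_data = [(0, "ok"), (1, "ok"), (2, "ok"),
                 (5, "spam"), (7, "spam"), (9, "spam")]

def expert_rule(links):
    """Expert-system style: the threshold is fixed in advance by a programmer."""
    return "spam" if links >= 4 else "ok"

def learn_threshold(examples):
    """Machine-learning style: choose the threshold with the fewest training errors."""
    values = sorted(v for v, _ in examples)
    # Candidate thresholds are the midpoints between neighbouring observed values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold):
        return sum(("spam" if v >= threshold else "ok") != label
                   for v, label in examples)

    return min(candidates, key=errors)

learned_threshold = learn_threshold(training_data)

def learned_rule(links):
    """Apply the threshold that was derived from the data."""
    return "spam" if links >= learned_threshold else "ok"
```

With more or different examples, `learn_threshold` would return a different value without any change to the code, whereas `expert_rule` changes only when someone rewrites it.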

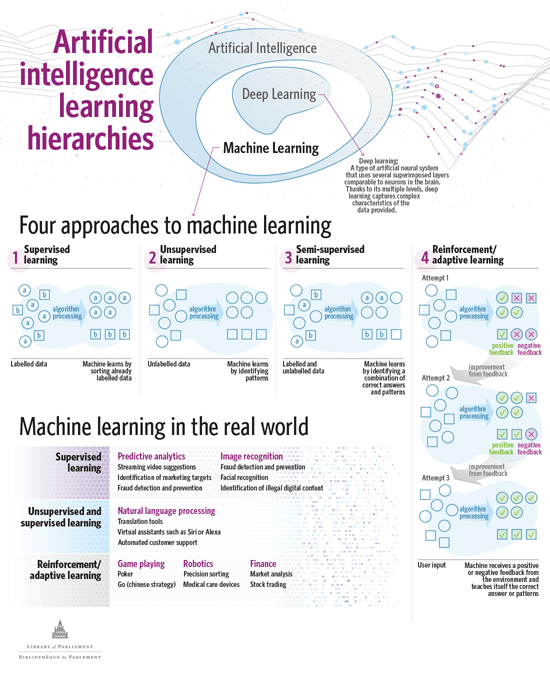

Machine learning is divided into several different groups and approaches. Supervised, unsupervised and semi-supervised learning algorithms learn from different data sets, while reinforcement learning algorithms learn through positive or negative feedback from the environment. Deep learning is a subgroup of machine learning that uses supervised, unsupervised and sometimes semi‑supervised learning.3 See Figure 1 for more information about machine learning.

Figure 1 – Machine Learning: Methods and Applications

Source: Figure prepared by the author using data obtained from Matthew Smith and Sujaya Neupane, Artificial intelligence and human development: Toward a research agenda (37.5 MB, 63 pages), International Development Research Centre, April 2018; and Brookfield Institute, Intro to AI for Policymakers: Understanding the shift (931 KB, 21 pages), March 2018.
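The difference between the learning approaches named before Figure 1 can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a description of any production system: the data values, the labels and the function names (`classify`, `two_means`, `learn_from_feedback`) are all hypothetical, and the reinforcement example uses a deliberately simple strategy of trying each action once and then repeating the best one.

```python
from statistics import mean

# Hypothetical one-dimensional measurements; all values are made up.
points = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]

# Supervised learning: every training point comes with a known label.
labels = ["small", "small", "small", "large", "large", "large"]
centroids = {
    lab: mean(p for p, l in zip(points, labels) if l == lab)
    for lab in set(labels)
}

def classify(x):
    """Assign x the label whose class average is nearest."""
    return min(centroids, key=lambda lab: abs(x - centroids[lab]))

# Unsupervised learning: no labels; group the points into two clusters.
def two_means(data, steps=10):
    low, high = min(data), max(data)  # initial cluster centres
    for _ in range(steps):
        near_low = [p for p in data if abs(p - low) <= abs(p - high)]
        near_high = [p for p in data if abs(p - low) > abs(p - high)]
        low, high = mean(near_low), mean(near_high)
    return low, high

# Reinforcement learning: no data set; learn from feedback received after each action.
def learn_from_feedback(reward, actions, rounds=20):
    value = {a: 0.0 for a in actions}  # running estimate of each action's reward
    count = {a: 0 for a in actions}
    for i in range(rounds):
        # Try every action once, then keep repeating the best one found so far.
        a = actions[i] if i < len(actions) else max(value, key=value.get)
        count[a] += 1
        value[a] += (reward(a) - value[a]) / count[a]
    return max(value, key=value.get)
```

For example, `classify(4.0)` uses the labelled averages, `two_means(points)` finds two groups without any labels, and `learn_from_feedback(lambda a: 1.0 if a == "right" else -1.0, ["left", "right"])` settles on the action that earns positive feedback.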

Advances in AI and the availability of big data enable significant decision-making processes to be automated in a variety of areas. However, this automation comes with some risk: the decision-making process for AI applications must be as transparent as possible to ensure that decisions are free of bias. As well, decision‑makers must be able to explain the reasoning behind a decision. In fact, in February 2019, the Government of Canada announced the Directive on Automated Decision-Making, which has the objective of ensuring certain principles, such as “transparency, accountability, legality, and procedural fairness,” are respected in the use of AI in the delivery of federal government services.4 However, according to the Organisation for Economic Co-operation and Development (OECD), a major challenge is that the complexity of AI algorithms makes understanding the decision‑making process difficult.5

The quality of the data used in machine-learning applications is therefore very important. However, these data are sometimes incomplete or biased because they are the result of decisions made by humans. This means that AI applications are part of both the problem and the solution. Although AI can help counter human bias, algorithms rely on the data provided to them. If they use biased data, AI applications may exacerbate social prejudices.6 For example, the results of a prediction tool used by judges were shown to be racially biased, as the tool was based on discriminatory decisions previously handed down by judges. When bias is known to exist, steps can be taken to eliminate it; however, the situation is quite different in the case of unconscious bias, since it is difficult to solve a problem when there is no awareness of its existence. According to the OECD report, it is important to be aware of bias inherent in society or in certain segments of it and to put processes in place to counter these biases.7
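The way biased historical data carries over into an automated decision can be shown with a small sketch. It rests entirely on assumptions: the records are invented, the groups "A" and "B" are abstract placeholders, and the "model" simply memorizes past approval rates. It does not represent the prediction tool mentioned above or any real system.

```python
from collections import defaultdict

# Hypothetical past decisions: (group, qualified, approved). Invented data in which
# equally qualified applicants from group "B" were approved less often.
history = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, False),
]

def fit_approval_rates(records):
    """'Train' by recording the past approval rate for each (group, qualified) pair."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for group, qualified, approved in records:
        totals[(group, qualified)] += 1
        approvals[(group, qualified)] += approved
    return {key: approvals[key] / totals[key] for key in totals}

model = fit_approval_rates(history)

def predict(group, qualified):
    """Approve when the learned historical rate is at least 50%."""
    return model[(group, qualified)] >= 0.5

# A basic audit: compare learned outcomes for equally qualified people across groups.
gap = model[("A", True)] - model[("B", True)]
```

Here `predict("A", True)` approves while `predict("B", True)` refuses, even though both applicants are qualified. Computing a figure such as `gap` is one simple way to make a bias visible so that it can be addressed.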

Given the risks, some people may not want to be subject to decision-making processes run by automated algorithms. Some jurisdictions have taken steps to inform the public about these processes and to give people the option of being subject to them or not. For example, within the European Union, Article 22 of the EU General Data Protection Regulation8 recognizes the right of any person not to be subject to a decision solely based on automated processing. In Canada, there does not appear to be any such right in the Personal Information Protection and Electronic Documents Act (PIPEDA),9 which applies to the collection, use and disclosure of personal information in the course of commercial activities across Canada.

Access to large amounts of data, while essential to AI development and performance, also raises many privacy issues, including ethical and security concerns. Data are collected and stored mainly by multinationals operating out of the United States. Unlike Canada with PIPEDA, however, the United States has no uniform regulatory framework for data management. As a result, data collection and storage methods do not always respect privacy in a transparent manner, which can compromise the security of the personal information of the individuals concerned.10

Some provinces have already established regulatory frameworks to protect data stored in Canada and abroad. For example, British Columbia’s Personal Information Protection Act 11 and Nova Scotia’s Personal Information International Disclosure Protection Act 12 require public bodies to keep the personal information collected within Canada and to ensure that it is not accessed from outside the country. In Ontario, under the Personal Health Information Protection Act, 2004,13 institutions (such as hospitals and pharmacies) and agents (insurance companies) that store personal health data must ensure that the selected cloud service provider complies with the guidelines set out in the Act. Similarly, in New Brunswick, the Personal Health Information Privacy and Access Act 14 requires that personal health data be stored in Canada. In Quebec, the Act respecting Access to documents held by public bodies and the Protection of personal information 15 requires that all personal information remain in Quebec, unless the third‑party cloud provider protects the data with provisions in line with those set out in the Quebec legislation.

Different methods of overseeing and protecting data collection could be considered. In its February 2018 report on PIPEDA, the Standing Committee on Access to Information, Privacy and Ethics recommended reforming PIPEDA. One major challenge involves ensuring that a new regulatory framework can adapt to technological change. Some experts add that, to be effective and to respond to international issues, oversight and protection standards are needed not only nationally, but globally as well.16

According to several experts, AI has the potential to change significantly over the next few decades. The development of AI is now at a stage called Artificial Narrow Intelligence (ANI) since it is currently at a level lower than that of human intelligence: it can solve one problem or task at a time.17 According to some experts, within about 30 to 50 years, AI will reach a stage of Artificial General Intelligence (AGI), equivalent to the level of human intelligence. At this stage, autonomous machines will have the ability to reason and make decisions. Other experts predict that AI will reach this stage in a hundred years, or even longer.18 Lastly, some believe that in several decades, AGI could hypothetically evolve toward a stage of Artificial Superintelligence, where AI would be at a level higher than that of human intelligence and could evolve on its own.19

It is difficult to predict when such change will happen, as well as the impact it will have. Nevertheless, some experts argue that the idea of AI in the form of robots threatening society is science fiction. These experts also argue that the development from ANI to AGI will take place so far in the future that it would be pointless to legislate the development of AI at this time. Moreover, they argue that it may be counterproductive to legislate a problem that does not yet exist, because such a measure may limit technological progress when it is important to stimulate and encourage innovation.20

Other experts argue that it is important to legislate and oversee the development of AI to prevent it from evolving into dangerous applications, because innovation must not come at the expense of public safety. Since it may take some time to put a regulatory framework in place, it is worthwhile to think about it now.21 In February 2017, the European Parliament adopted a resolution22 further to the European Commission’s recommendations concerning civil law rules on robotics. In this resolution, the European Parliament states that a gradual approach is important in order not to impede innovation, while stressing the importance of starting to study the issue of civil liability now. This may cover a variety of issues, including the legal liability for damage caused by a robot.

In February 2019, the OECD Committee on Digital Economy Policy announced that it would use the recommendations of an international group of experts to develop the first intergovernmental policy guidelines for AI at its next meeting in March 2019. This committee also plans to present draft recommendations in May 2019.23

Despite the lack of a regulatory framework in Canada, the federal government provides targeted AI funding in some sectors. For example, in its 2017 budget,24 it announced $125 million in funding over five years for its Pan‑Canadian AI Strategy, which aims to increase the number of AI researchers in Canada and to “develop global thought leadership on the economic, ethical, policy and legal implications of advances in artificial intelligence.” 25 With this funding, the federal government is able to prioritize specific sectors in order to maximize Canada’s innovation potential. However, as several experts have suggested, governments must be aware of the risks associated with AI and put tools in place to deal with them.

† Papers in the Library of Parliament’s In Brief series are short briefings on current issues. At times, they may serve as overviews, referring readers to more substantive sources published on the same topic. They are prepared by the Parliamentary Information and Research Service, which carries out research for and provides information and analysis to parliamentarians and Senate and House of Commons committees and parliamentary associations in an objective, impartial manner.

© Library of Parliament